On disciplinary societies, surveillance technology and the biopolitics of tomorrow: how Israel is battle-testing its technologies on Palestinian land

Power, wrote the philosopher Michel Foucault in Discipline and Punish (1975), is articulated directly onto time: it ensures its control and guarantees its use. To this, two fundamental dimensions must be added: space, often narrow, and the control of visibility. And, Foucault writes again, disciplinary power is exercised through its invisibility.

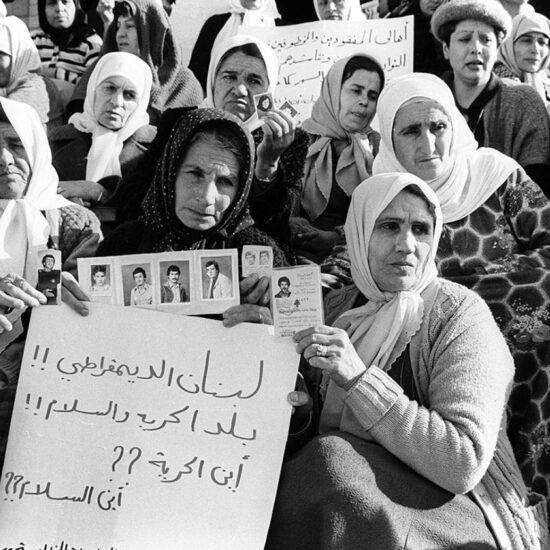

Nearly fifty years after that publication, during the first genocide committed in the age of artificial intelligence, Israel's disciplinary society can rely on a surveillance system of unprecedented accuracy – along with the complicity of the Western world. An array of highly developed tools has made it possible not only to complete, but above all to maintain, the occupation for almost sixty years. Smart walls, facial recognition, biometric data and drones are all tools used to control Palestinians: to divide, surveil and ultimately punish them.

Reflecting on the birth of the prison as a punitive infrastructure replacing public torture – still based on the biopolitical management of criminal bodies, but through their segregation rather than the spectacle of their torment – Foucault drew on Jeremy Bentham's figure of the Panopticon, relating its simple and brutal architecture to the concept of power and surveillance, that is, of knowledge. Power means knowing, at all times and in all places, what your target is doing and how much risk can be run to eliminate it; but it also means denying the adversary access to the field of visibility, so that while the victim is precisely, narrowly identified, the executioner is not: diluted in the nebulous complicity of an acronym, the IDF, he cannot be held accountable for his crime.

We know the principle on which the Panopticon was based: at the periphery, an annular building; at the centre, a tower; this tower is pierced with wide windows that open onto the inner side of the ring; the peripheric building is divided into cells, each of which extends the whole width of the building; they have two windows, one on the inside, corresponding to the windows of the tower; the other, on the outside, allows the light to cross the cell from one end to the other. All that is needed, then, is to place a supervisor in a central tower and to shut up in each cell a madman, a patient, a condemned man, a worker, or a schoolboy. Any Palestinian. By the effect of backlighting, one can observe from the tower, standing out precisely against the light, the small captive shadows in the cells of the periphery.

The Panopticon, thus described, is a machine for dissociating the see/being-seen dyad: in the peripheral ring one is totally seen, without ever seeing; in the central tower, one sees everything, without ever being seen. Nearly fifty years later, even though the technology has changed, the ideological principles of surveillance remain the same. So do the effects on monitored and punished bodies: the monitored, punished bodies of Palestinians.

Visible and unverifiable

This permanent visibility became a way of exercising power in what Foucault described as 'disciplinary societies' – and in what Israel calls 'security measures'. In so doing, the Israeli inspector induces in the Palestinian inmate a state of conscious and permanent visibility. Power, therefore, even in the age of artificial intelligence, remains visible and unverifiable.

Visible: the inmate will constantly have before his eyes the tall outline of the central tower from which he is spied upon. Unverifiable: the inmate must never know whether he is being looked at at any one moment, yet he must be sure that he may always be so. No need for force: pure observation is enough, according to Foucault, to "constrain the convict to good behaviour, the madman to calm, the worker to work, the schoolboy to application, the patient to the observation of the regulations." And the Palestinian – to surrender.

Theoretically, he who is subjected to a field of visibility, and who knows it, assumes responsibility for the constraints of power; he makes them play spontaneously upon himself; he inscribes in himself the power relation in which he simultaneously plays both roles; he becomes the principle of his own subjection.

As such, the monitored body comes to exercise self-restraint. It does not matter who occupies the watchtower – who, in today's Gaza, remotely pilots the drone – or even whether it is occupied at all or runs automatically: power belongs to no one; it functions automatically and is constantly present through the piercing gaze of the tower. The Panopticon is a terrifying instrument of surveillance: the very few keeping a constant, watchful eye on every movement of the many. As Gaza teaches.

Panopticon 2.0

The list of Israeli spyware and surveillance technologies – aimed mainly at the biopolitical control of Palestinian bodies and at the suppression of dissent – is long and alarming.

Pegasus, for example, an extremely sophisticated piece of spyware made by the Israeli NSO Group, allows government agencies around the world to capture the entire contents of a mobile phone. Once installed, it can covertly access the phone's microphone and camera, photos and emails: a 2.0 version of surveillance, portable in a pocket, yet only the tip of the iceberg of what Israel has been doing in Palestine for decades. Surveillance technologies have reportedly been trialled in Palestine, battle-tested on Palestinians.

The ideological alignment that has unfolded between Israel and repressive, controlling regimes – apartheid South Africa, Pinochet's Chile, Rwanda in 1994, more recently Myanmar during the Rohingya genocide, and Modi's ultranationalist India – has now entered a new dimension. Beyond the mere arms sales that connect these countries with the Zionist state, in the early 21st century Israel has become a key global inspiration for an ethno-nationalism enforced through AI-driven coercion.

Many of the aforementioned states, along with Saudi Arabia, Bangladesh, the UAE and even the EU, through its Border and Coast Guard Agency Frontex, have bought Israeli surveillance technology extensively: to control dissent, minorities or the so-called 'migrant surge' of 2015, with the eye in the sky watching what happens at sea. The same border-industrial complex rules over the US-Mexico border, in the form of surveillance towers made by Elbit, Israel's leading defence company: a high-tech digital wall first tested in Palestine, across the West Bank and along the Israel-Gaza border.

To mention just one more tool: a recent joint investigation by +972 Magazine, an Israeli left-wing online news and opinion outlet, and the Hebrew-language news site Local Call exposed the existence of an AI-based program named 'Lavender', developed by the Israeli army to identify human targets. Lavender, kept under wraps until the investigation was published in early April 2024, is reported to have played a significant role in the targeting of Palestinians: according to the investigation's sources, its influence on the military's operations was such that officers essentially treated the outputs of the AI machine "as if it were a human decision."

During the early stages of the onslaught on Gaza, the army gave sweeping approval for officers to adopt Lavender's kill lists, with no requirement to thoroughly check why the machine made those choices or to examine the raw intelligence data on which they were based. The investigation showed that human personnel often served only as a 'rubber stamp' for the machine's decisions, devoting about twenty seconds to each target before authorising a bombing, just long enough to make sure the Lavender-marked target was male. Twenty seconds: the span of time humans came to consider sufficient to let the machine take control of life and death, despite knowing that the system makes errors in approximately 10 percent of cases and is known to occasionally mark individuals who have merely a loose connection to militant groups, or no connection at all. The result: thousands of Palestinians, most of them women and children or people who were not involved in the fighting, were wiped out by Israeli airstrikes because of the AI program's decisions.

The list could continue: an additional automated system, called 'Where's Daddy?', was used specifically to track targeted individuals and strike them while they were in their homes, usually at night with their whole families present, rather than during military activity. In the machine's logic, it is easier to locate individuals in their private homes; meanwhile, the military's preference for unguided missiles over far more expensive precision bombs caused entire buildings to collapse. 'Collateral damage' – as they call it. In advance, the military authorised the killing of up to 15 or 20 civilians for every junior Hamas operative marked by Lavender, and on several occasions more than 100 for a senior official. 'The right to self-defence' – as they call it.

Philosophy after Gaza

In the introduction to his talk on Tuesday, April 16, AUB Professor Harry Halpin, co-founder of Nym – a startup building a decentralised mixnet to end mass surveillance – questioned whether philosophy has any future after Gaza. Quoting Theodor Adorno's dictum that "it is barbaric to write poetry after Auschwitz," Halpin placed the Holocaust and the ongoing genocide in Gaza side by side as moments in which the dialectic of culture and barbarism reaches its final stage. In other words, trapped in what he described as a "purely negative" moment, philosophers have seen their role come to an end, replaced by the jargon of authenticity, the search for truth.

But there is more. What Gaza is showing is that the industrialisation of murder carried out by Nazi Germany – not only against Jews, but also against other ethnic minorities and political opponents – has reached a further stage: that of artificial intelligence. The machinery of mass killing perfected in the last century, far from being abolished, has been dispersed. Its technologies are now available on the market.

However, it would be misleading, and would feed Israeli narratives, to believe in the pure automation of mass killing. Behind the machine, even if only for a handful of seconds, there is a human. As industrial technology transforms into a universalising cybernetics, the foretold enframing of the world by AI continues to lead to barbarism in present-day Palestine. From the use of artificial intelligence to guide attacks that treat civilian deaths as a 'false positive rate', to mass surveillance enabled by Google and Amazon, all of these falsely displace human agency onto AI.

Dehumanising responsibility risks absolving the people responsible for very real crimes, to the point that we end up humanising the machines, believing them accountable, while objectifying the people of Gaza as expendable machines.

By critiquing this perversion, Halpin stated, philosophy can re-establish its political character, a character so desperately missing as the deaths of Palestinians become normalised. Yet halting the genocide in Gaza requires more than the weak powers of complaint; it requires creating new forms of politics and technology as a foundation for global revolution.

Two ways

Long story short, there are two ways to look at the Palestine laboratory. The first: if it really is the testing ground for the wars of the future, if the checkpoints of the West Bank are the proving ground for ever more sophisticated walls and Gaza the mirror of what mass destruction will mean from now on, then the Israeli military-industrial complex, which for almost sixty years has been using the illegal occupation as a laboratory for weapons and surveillance technologies, will continue to export them all over the world with impunity, fuelling conflicts, violence and abuse: the margin for error shrinking as destructive power grows. And the atrocity of collateral damage will find us anaesthetised, appeased, ready to accept it in silence.

But if the Palestine laboratory is also an example of resistance to surveillance and punishment; if the self-regulation that the Panopticon seeks to impose on Gaza fails, and the Palestinian people refuse to be subjugated and keep struggling to resist, then there is, perhaps, another experiment underway: one running in the opposite direction, a refusal of chains and walls, to which the resistance to tomorrow's occupations will look for inspiration.